I’m happy to see so many software developers who are enthusiastic about Agile methods. It’s nice when people enjoy their work. However, I think there is a problem: some teams are placing too much emphasis on Project Management activities while apparently forgetting some basic Software Engineering practices. In this post I will present some examples of this imbalance between the two.

Drawing Burn-down Charts vs. Drawing UML Diagrams

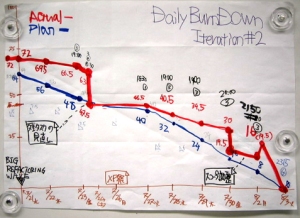

Burn-down charts help us track the progress being made. They show clearly how much work has been completed and how much work is left until the end of an iteration. A glance at the chart tells us whether the project is behind or ahead of schedule. But the burn-down chart tells us nothing about the quality of the software being produced, nor does it have any value after the iteration has been completed. Burn-down charts are a project management artifact; they are not a deliverable of the software development process.
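
A burn-down chart is little more than a running subtraction. The following Python sketch, assuming work is counted in story points and using hypothetical function names, computes the remaining-work line and the ideal line it is compared against.

```python
from typing import List


def remaining_work(total_points: int, completed_per_day: List[int]) -> List[int]:
    """Points still open at the end of each day; index 0 is the start of the iteration."""
    remaining = [total_points]
    for done in completed_per_day:
        remaining.append(remaining[-1] - done)
    return remaining


def ideal_line(total_points: int, days: int) -> List[float]:
    """Straight line from total_points on day 0 down to zero on the last day."""
    return [total_points - total_points * day / days for day in range(days + 1)]


def behind_schedule(actual: List[int], ideal: List[float]) -> bool:
    """True if, on the latest recorded day, more work is left than the ideal line allows."""
    today = len(actual) - 1
    return actual[today] > ideal[today]


actual = remaining_work(40, [3, 5, 2, 4])  # [40, 37, 32, 30, 26]
ideal = ideal_line(40, 10)                 # day 4 of 10 should be at 24.0
print(behind_schedule(actual, ideal))      # True
```

Every input is a count of completed points; whether those points were delivered as well-designed, reviewed code is invisible to the chart.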

UML diagrams are an essential part of the software development process. They are required by the person doing the design to conceive and compare several alternatives. They are needed to present these alternatives and allow design reviews. They serve as part of the documentation of the code that is actually implemented. Drawing UML diagrams is a software engineering activity, and these diagrams are part of the deliverables of the software development process.

However, it seems that some Agile programmers invest very little time in drawing UML diagrams. Perhaps they think that these diagrams are only needed in Big Design Up Front, and not when you do Emergent Design or TDD. In my opinion this is a mistake. If you spend more time drawing burn-down charts than UML diagrams, then you are doing more project management than software engineering.

Estimating User Stories vs. Computing Algorithm Complexity

In an Agile project the User Stories are the basic unit of work, and it is very important to estimate the effort that will be required to implement these stories. Techniques such as Planning Poker were invented to make this estimation process as accurate as possible, since iterations are very short and there is little margin for error. However, the time spent on effort estimation does not affect the quality of the software. Effort estimation is a project management activity.

Professional software developers must be able to compute the complexity of the algorithms they adopt in their systems. In fact, they should base their design decisions on the different time and space complexities of alternative algorithms and data structures. They should understand the differences between best-, worst-, and average-case complexities. Computing algorithm complexity is a software engineering activity, essential to ensure the quality of the systems being developed.
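
As a small illustration of best- and worst-case reasoning, this Python sketch counts the probes made by a linear search, which is O(n), and by a binary search over sorted data, which is O(log n). Both functions are hypothetical teaching helpers, not library calls.

```python
from typing import List, Tuple


def linear_search(items: List[int], target: int) -> Tuple[int, int]:
    """Return (index or -1, probes). Worst case O(n): every element is examined."""
    probes = 0
    for i, value in enumerate(items):
        probes += 1
        if value == target:
            return i, probes
    return -1, probes


def binary_search(items: List[int], target: int) -> Tuple[int, int]:
    """Items must be sorted. Return (index or -1, probes). Worst case O(log n)."""
    lo, hi = 0, len(items) - 1
    probes = 0
    while lo <= hi:
        mid = (lo + hi) // 2
        probes += 1
        if items[mid] == target:
            return mid, probes
        if items[mid] < target:
            lo = mid + 1  # target can only be in the upper half
        else:
            hi = mid - 1  # target can only be in the lower half
    return -1, probes


data = list(range(1024))
print(linear_search(data, 1024))  # (-1, 1024)
print(binary_search(data, 1024))  # (-1, 11)
```

On 1,024 sorted integers, a missing value costs 1,024 probes with linear search but only 11 with binary search, while the best case for linear search (the first element) is a single probe.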

However, it seems that some Agile programmers don’t ask themselves many questions about the complexity of the algorithms they are using. They just go to the library and choose the Collection with the most convenient API. Or they always choose the simplest possible solution, because of KISS and YAGNI. But if you spend more time estimating user stories than computing time and space complexity, then you are doing more project management than software engineering.
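
Two collections can offer the same convenient API and still have very different complexities. This sketch, assuming CPython's built-in `list` and `set`, counts how many equality checks a membership test (`in`) performs for a missing key. The `CountingKey` class is a hypothetical helper; the exact number of comparisons inside a set is a CPython implementation detail.

```python
class CountingKey:
    """Hashable wrapper around an int that counts calls to __eq__ (shared counter)."""

    eq_calls = 0

    def __init__(self, value: int) -> None:
        self.value = value

    def __eq__(self, other: object) -> bool:
        CountingKey.eq_calls += 1
        return isinstance(other, CountingKey) and self.value == other.value

    def __hash__(self) -> int:
        return hash(self.value)


def equality_checks(container, key: CountingKey) -> int:
    """Evaluate `key in container` and return how many __eq__ calls it triggered."""
    CountingKey.eq_calls = 0
    _ = key in container  # the result is ignored; only the work done matters here
    return CountingKey.eq_calls


items = [CountingKey(i) for i in range(1000)]
missing = CountingKey(5000)
print(equality_checks(items, missing))       # list: one comparison per element
print(equality_checks(set(items), missing))  # set: hash lookup, typically no comparisons
```

Both calls look identical at the call site; the list scans all 1,000 elements, while the hash-based set usually rejects the key without comparing it to anything.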

Measuring Velocity vs. Measuring Latency and Throughput

In Agile projects, the team members constantly measure their Velocity: how many units of work have been completed in a certain interval. Of course people want to be as fast as possible, assuming that velocity is an indication of their productivity, efficiency and efficacy as software developers. But velocity gives us no indication of the quality of the software being produced. On the contrary, it is common to see Agile teams working very fast in the first iterations only to slow down considerably when they start doing massive Refactorings. Thus measuring Velocity is just a project management activity of dubious meaning.
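
Velocity itself is a simple average. A minimal sketch, assuming velocity is measured in story points per iteration and averaged over the last three iterations (a common convention, assumed here), shows both the calculation and its limits: the slowdown below is visible in the numbers, but nothing in them says why it happened.

```python
from math import ceil
from typing import List


def average_velocity(points_per_iteration: List[int], window: int = 3) -> float:
    """Mean story points completed over the most recent `window` iterations."""
    recent = points_per_iteration[-window:]
    if not recent:
        raise ValueError("no completed iterations yet")
    return sum(recent) / len(recent)


def iterations_left(backlog_points: int, velocity: float) -> int:
    """Naive forecast: remaining backlog divided by velocity, rounded up."""
    if velocity <= 0:
        raise ValueError("velocity must be positive")
    return ceil(backlog_points / velocity)


history = [30, 32, 18, 16]  # fast start, then a slowdown (e.g. during a large refactoring)
v = average_velocity(history)
print(v, iterations_left(100, v))  # 22.0 5
```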

Many software developers today are working on client/server systems such as Web sites and Smartphone Apps. These systems are based on the exchange of requests and responses between a client and a server. In such systems, the Latency is the time interval between the moment the request is sent and the moment the response is received. The Throughput is the rate at which requests are handled, i.e., how many requests are answered per unit of time. In client/server systems it is essential to constantly measure the latency and the throughput. A small code change, such as making an additional query to the database, may have a big impact on both.
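
Both quantities can be computed from a simple request log. The sketch below assumes each request is recorded with hypothetical `sent_ms` and `received_ms` timestamps in milliseconds, reports latency percentiles using the nearest-rank method, and computes throughput as completed requests per second over the observed window.

```python
import math
from dataclasses import dataclass
from typing import List


@dataclass
class Request:
    sent_ms: int      # when the client sent the request
    received_ms: int  # when the client received the response


def latencies_ms(requests: List[Request]) -> List[int]:
    return [r.received_ms - r.sent_ms for r in requests]


def percentile(values: List[int], p: float) -> int:
    """Nearest-rank percentile, for 0 < p <= 100."""
    ordered = sorted(values)
    rank = max(1, math.ceil(p / 100 * len(ordered)))
    return ordered[rank - 1]


def throughput_per_s(requests: List[Request]) -> float:
    """Completed requests per second between the first send and the last response."""
    start = min(r.sent_ms for r in requests)
    end = max(r.received_ms for r in requests)
    return len(requests) / ((end - start) / 1000)


log = [Request(0, 40), Request(100, 150), Request(200, 230),
       Request(300, 500), Request(400, 440)]
lat = latencies_ms(log)  # [40, 50, 30, 200, 40]
print(percentile(lat, 50), percentile(lat, 95), throughput_per_s(log))  # 40 200 10.0
```

The single slow request dominates the 95th percentile while leaving the median untouched, which is why tail percentiles are worth tracking alongside the typical case.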

However, it seems that some Agile programmers constantly measure their velocity but have absolutely no idea of the latency and throughput of the systems they are developing. They may ignore non-functional requirements and choose simplistic design alternatives that have a huge negative impact on the system’s performance. Thus if you measure your own velocity more frequently than you measure latency and throughput, you are doing more project management than software engineering.

Meetings and Retrospectives vs. Design and Code Reviews

Agile projects normally have daily meetings to discuss how the team is making progress: what has been done, what will be done, and whether there are any obstacles. These meetings do not include any technical discussion. Projects may also have retrospective meetings after each iteration, with the goal of improving the process itself. These meetings are project management activities; they do not address software quality issues.

Design and code reviews focus on software quality. In a design review, an alternative should be chosen based on non-functional quality attributes such as extensibility and maintainability. Code reviews are an opportunity to improve the quality of code that should already be correct. Code reviews should also be used to share technical knowledge and make sure that the team members are really familiar with each other’s work. These kinds of reviews are software engineering activities, with a direct effect on the deliverables of the software development process.

However, it seems that some Agile programmers focus too much on the process and not enough on the product. Successful software development is much more than having a team managing itself and constantly improving its processes. If the quality of the software is decaying, if technical debt is accumulating, if there is too much coupling among modules and if the classes are not cohesive, then the team is not achieving its goal, no matter how efficient the process seems to be. If you are spending more time in stand-up and retrospective meetings than on design and code reviews, then you are doing more project management than software engineering.

In Summary

Drawing burn-down charts, estimating user stories, measuring your velocity and participating in retrospectives are project management activities. Drawing UML diagrams, computing the complexity of algorithms, measuring latency and throughput and participating in design reviews are software engineering activities. If you are a software engineer, you should know what is more important. Don’t let your aspiration to become a Scrum Master make you forget the Art of Computer Programming. Good luck!

I agree with a lot of what you have written. There are two main issues here. First, Agile processes (e.g. Scrum, XP, Kanban) emphasize emergent design rather than up front design. Write something quickly, show it to the customer and stakeholders and iterate.

Second, Agile processes are prescriptive, unlike Waterfall processes. As you say, Agile processes involve many energy-consuming rituals. Some, like scrums, are daily activities, and others, like XP’s user stories and test-driven development, even dictate how the system is developed.

With all this, why bother designing anything up front when you are just going to rewrite it anyway? Good unit tests allow refactoring with impunity. Working closely with stakeholders (Scrum’s “Product Owner”) and frequent small iterations mean any deviation can be quickly and easily fixed, right?

While Agile processes help, they are not a substitute for experience, as you say. Focusing on purely customer-facing features (i.e. functional requirements), as Scrum and other Agile processes encourage, can lead to expensive rewrites and unhappy customers when unarticulated or assumed non-functional requirements are missed. A focus on the code instead of more accessible forms of documentation can alienate the non-coders, too.

The biggest problem is that architects/engineers have to adapt. In waterfall processes, an architect’s place was safe and understood. Now an architect is forced to justify his or her place. Instead of taking time to design and think through requirements and designs, developers expect to start coding next week. Designs are expected to change frequently, possibly every day.

Agile processes have their place but, like poor “ivory tower” architects and engineers, they can have their downsides or be misused. Poor architects and engineers wilt under this pressure. Good architects prioritize, emphasize their experience and demonstrate how a different focus can benefit the project and the team.

Thanks for your comment, Anthony! I agree with you that architects must adapt to Agile, incremental software development. In my opinion most architects are able to identify the current deficiencies in simplistic approaches, but they are not always able to propose an acceptable alternative. My intention with Adaptable Design Up Front (ADUF) is exactly to offer such an option that may be adopted by Agile practitioners.

Yes, the process has become more important than the product. The product is quality software that meets the requirements and hence the needs of the stakeholders/customers. This can only be achieved by having quality requirements, quality design, quality coding and quality testing. The process, be it Agile, Waterfall, Waterfall with feedback, Spiral, or any of many others, does not answer the question of what makes quality requirements, what makes quality design and code, and what makes quality testing.

I have almost 50 years of experience in the real-time software industry, working on aircraft systems, submarine systems, satellite systems and many others. The problems, and in some cases failures, of these systems were not in the process itself, but in the technical execution of the process. The requirements did not meet the definition of true software requirements, the design was slipshod or worse, the testing was completely inadequate, etc.

Introducing a new process does not address the failures of technical execution at all. Introducing a new process without changing and improving the technical execution does not have any impact on the delivered product.

I have personally observed the introduction of an Agile approach without changing the technical execution, and the result was the same low-quality software, delivered late and over budget. The hoped-for improvement in the development of software systems will not come from a change in the process or improved management; it will come from the improvement of our technical execution in the steps of the process, whether it is Agile or any other.

It is time that we grow up as engineers and develop proper requirements, design, code and testing execution, and maybe we will see true improvement in our profession.

I would also like to comment on what seems to me to be a denigration of the waterfall process. You cannot escape the waterfall. You must have requirements before you can start developing a system, you must design a solution before you code and you must code before you can test the solution. Whether this is done for an iteration, an increment, or the entire system is not germane; you must have a waterfall at some level of your development.

Regarding documentation, I have been accused of being both document happy and document averse simultaneously. I believe that documentation that captures our thought process and our decisions is absolutely necessary. Without it, how can anyone evaluate what we have done? How can we assure that we are doing the right thing? How can the product be tested? The important thing is to develop quality documentation. Just as the software developed is often referred to as a ‘big ball of mud’ or ‘spaghetti code’, much of the documentation I have seen can be similarly described. I have seen requirements documents so convoluted that I wondered what the real requirements were. I have seen design documents that were almost more complex and confusing than the resulting code. Documentation is absolutely necessary for large complex systems and for systems that will have a long life. Quality documents act as a road map for the maintenance of the system, whether for bug fixing or for modification or enhancement.

I am sorry for this diatribe, but as I have said for many years, we as software professionals have the knowledge, tools, methods and techniques to develop quality software, on time and within budget. We just don’t do it. It is in the technical execution of developing our systems that we fall down. It is time that we realized that new processes or software management do not have the answers; it comes down to performing excellent technical execution, from requirements, to design, to code and to testing, with attendant excellent documentation.

Vince Peterson

Software Consultant

Thanks for your comment, Vince! I agree that we, professional software developers, should focus on the “technical execution”. One important aspect is deciding which methodologies and tools are right for a specific project. We should be able to classify software systems in the same way civil engineers classify construction projects. It is clear that building a house is different from building a road or a bridge, but in our case building a Web site, a smartphone app or a complex real-time system are all treated as “software development”.

Hello all of you, thank you very much for explaining all these things about software and engineering.

I am a beginner, and basically I have knowledge of .NET and databases. What you guys are talking about is sometimes helpful to me.

I think the quality aspects of software delivery, such as reducing technical debt, improving cohesion and reducing coupling, should be embedded in the delivery process or in the done criteria. So when a burn-down chart indicates progress, it should be safe to assume that the code was delivered only after passing automated QA / static analysis rules (through PMD etc.) in Continuous Integration.
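
The suggestion above can be sketched as a gate that a story must pass before its points count toward the burn-down. The report fields and the coverage threshold below are hypothetical; a real pipeline would fill them from its test runner and from a static analysis tool such as PMD, whose output format is not modeled here.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class BuildReport:
    failed_tests: int
    new_static_analysis_issues: int  # e.g. new violations reported by a tool such as PMD
    line_coverage: float             # fraction between 0.0 and 1.0


def unmet_done_criteria(report: BuildReport, min_coverage: float = 0.8) -> List[str]:
    """Return the reasons a story is not 'done'; an empty list means it may count as progress."""
    reasons = []
    if report.failed_tests > 0:
        reasons.append(f"{report.failed_tests} failing test(s)")
    if report.new_static_analysis_issues > 0:
        reasons.append(f"{report.new_static_analysis_issues} new static analysis issue(s)")
    if report.line_coverage < min_coverage:
        reasons.append(f"coverage {report.line_coverage:.0%} below {min_coverage:.0%}")
    return reasons


print(unmet_done_criteria(BuildReport(0, 0, 0.85)))  # []
print(unmet_done_criteria(BuildReport(0, 2, 0.60)))  # ['2 new static analysis issue(s)', 'coverage 60% below 80%']
```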

A lot of good points, but I lack some concrete suggestions. You mention a couple of times that quality of implementation is never measured. How would you suggest measuring quality? How do you know that your coupling has become too tight?

I agree that scrum has been good at selling itself through rituals, but what are the rituals that software engineering should adopt?

I ask because as a software architect I struggle with these questions.

Thanks for your comment, Jacob. There is a great diversity of software quality metrics in use, including complexity, cohesion and coupling, and many of these metrics can be collected through static code analysis tools.
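
As one concrete example of a coupling metric, this sketch computes afferent coupling (Ca, how many modules depend on a module), efferent coupling (Ce, how many modules it depends on) and Robert C. Martin's instability I = Ce / (Ca + Ce) from a dependency map. The module names are hypothetical, and treating a module with no dependencies in either direction as I = 0 is an assumption of this sketch; in practice the map would come from a static analysis tool.

```python
from typing import Dict, Set, Tuple


def coupling_metrics(deps: Dict[str, Set[str]]) -> Dict[str, Tuple[int, int, float]]:
    """Map each module to (Ca, Ce, instability), given module -> modules it depends on."""
    modules = set(deps) | {target for targets in deps.values() for target in targets}
    afferent = {m: 0 for m in modules}
    for targets in deps.values():
        for target in targets:
            afferent[target] += 1
    metrics = {}
    for m in modules:
        ca, ce = afferent[m], len(deps.get(m, set()))
        instability = ce / (ca + ce) if ca + ce else 0.0  # isolated module: assumed 0
        metrics[m] = (ca, ce, instability)
    return metrics


deps = {
    "web": {"orders", "users"},
    "orders": {"users", "db"},
    "users": {"db"},
    "db": set(),
}
for name, (ca, ce, i) in sorted(coupling_metrics(deps).items()):
    print(f"{name:7} Ca={ca} Ce={ce} I={i:.2f}")
```

Here `web` comes out maximally unstable (I = 1.0) and `db` maximally stable (I = 0.0). The numbers flag where change will ripple, but, as the following comments point out, they do not by themselves say whether the design is good.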

I think the most important “ritual” for software developers is the Design Review. I discuss it in some of my previous posts:

Those are some good articles. I am fully aware of metrics like coupling and complexity; I even wrote my own analyser. These are good indicators of problems with maintainability, but how do I know that my coupling has crossed the threshold and become too tight? I am looking for a way to turn the metrics into leading rather than lagging indicators.

I am also struggling with proving other quality attributes. Performance can obviously be proven by testing, but that kind of testing tends to prove that it is good enough, not that the performance also scales, or that you have thought of alternatives (as you mention in the post you reference).

I guess it always comes back to ‘it depends’ and I see the ‘rituals’ as a good way of making sure that thought has actually been given to a particular question.

Hayim stated that “There is a great diversity of software quality metrics in usage, including complexity, coupling and cohesion …” I would argue that these are not software quality metrics. In my opinion they are coding metrics only and software is much more than the coding.

Also I would argue that they are not quality metrics. In 1985 at a software conference in Seattle I sat on a panel on “Software Metrics”. I made the statement that all software metrics that I was aware of were measures of software badness, not software goodness. My opinion has not changed since then.

Metrics such as complexity, coupling and cohesion are good for raising red flags to the presence of potentially bad code, but they do not assure that the software is good. Is the design of the system a quality design? These metrics do not tell us. I also offered a challenge: give me a problem to solve, tell me what metrics you will measure my solution against, and I will deliver a terrible solution that will pass all the metrics. In other words, the metrics measure badness, not goodness.

One real example of this is the McCabe complexity measure, which is currently used in many places as a measure of software quality. This metric measures the number of linearly independent paths through a closed subroutine. The number of paths is a measure of the number of test cases needed to fully test the routine, and hence a measure of complexity. One can take a routine that has a high complexity and simply cut it into pieces that solve parts of the original routine, link them through call statements, and the resulting routines will have low measures of complexity. This is not necessarily good design.
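
The effect described here is easy to reproduce. This Python sketch uses the standard `ast` module to approximate McCabe's cyclomatic complexity as one plus the number of decision points, which for a single routine with only binary decisions agrees with the graph-based definition E - N + 2P. It is a simplification that counts only `if`, `for`, `while`, conditional expressions, `except` clauses and extra operands of `and`/`or`; the `price` routines are hypothetical.

```python
import ast
from typing import Dict

# Node types treated as one extra decision point each (a simplification).
DECISION_NODES = (ast.If, ast.For, ast.While, ast.IfExp, ast.ExceptHandler)


def cyclomatic_complexity(func: ast.FunctionDef) -> int:
    """Approximate McCabe complexity: 1 + number of decision points in the function."""
    complexity = 1
    for node in ast.walk(func):
        if isinstance(node, DECISION_NODES):
            complexity += 1
        elif isinstance(node, ast.BoolOp):  # `a and b and c` adds two decision points
            complexity += len(node.values) - 1
    return complexity


def complexity_per_function(source: str) -> Dict[str, int]:
    tree = ast.parse(source)
    return {node.name: cyclomatic_complexity(node)
            for node in ast.walk(tree) if isinstance(node, ast.FunctionDef)}


MONOLITH = """
def price(order):
    total = order["base"]
    if order["express"]:
        total += 10
    if order["fragile"]:
        total += 5
    if order["member"]:
        total -= 3
    if order["coupon"]:
        total -= 2
    return total
"""

SPLIT = """
def surcharges(order):
    extra = 0
    if order["express"]:
        extra += 10
    if order["fragile"]:
        extra += 5
    return extra

def discounts(order):
    off = 0
    if order["member"]:
        off += 3
    if order["coupon"]:
        off += 2
    return off

def price(order):
    return order["base"] + surcharges(order) - discounts(order)
"""

print(complexity_per_function(MONOLITH))  # {'price': 5}
print(complexity_per_function(SPLIT))     # {'surcharges': 3, 'discounts': 3, 'price': 1}
```

Splitting the routine lowers the worst per-function score from 5 to 3 while the total rises from 5 to 7, so a per-function threshold is satisfied without the logic becoming any simpler.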

In other words, metrics raise red flags but do not assure quality software. As Hayim says, peer reviews and design reviews are the best place to assure quality. This is because the true quality of a software system requires the human mind to measure the quality of the requirements, the quality of the design and the quality of the implemented code, not simple metrics.

I am not saying that we should not use metrics. They are valuable for showing possible problems. But I am saying that metrics by themselves do not assure quality. Use them, but also use the human mind to assure true quality.

One final example is the measure that has recently been in vogue called “requirements volatility”. This measure determines the change in requirements, which as we all know affects software development. Low volatility is good and high volatility is bad. The problem that I see with using this metric is the lack of understanding of the definition of software requirements. I have seen requirements written at such a high level of abstraction that when the true software requirements were changed (perhaps by a control laws engineer) it had no impact on the “software requirements”, and hence the volatility falsely appeared low. In other cases the software requirements were so detailed that they actually included design-level decisions. In this case a change in the design (perhaps through refactoring) caused the requirements documents to change and the volatility falsely appeared high.

We need our brains to properly utilize metrics and to assure that the software developed has the best quality. It is time to realize that there is an art to software development and this art exists in the mind and not simply in metrics. Use metrics, but use them intelligently and with well-thought-out development of requirements, design, code and testing.

Vince, I am completely in line with you here. At work we also use software metrics strictly as an indicator of possible issues. Like you say, people will meet metrics, whatever they may be: Goodhart’s law. We also try to collect a wide range of metrics so that it becomes impossible to game them all without actually doing something right. Furthermore, the results of code scans are explicitly not shared with developers, precisely because of Goodhart’s law. Architects and lead engineers review them to understand what underlying problems they may point to.

Regarding volatility metrics, I think they should be seen in connection with code volatility. Check code volatility instead. If you assume that code is supposed to do one thing, and one thing only, then it should be possible to code it once to meet a specific requirement. If the requirement changes, then the code may need to change (or be thrown away). This may be legitimate, but may also indicate that there was a problem with the requirement. If the code changes without the requirement changing, then it should indicate a problem with the understanding of the requirement (or worse, a problem with the code). In both cases it is worth taking a closer look, because very often high complexity comes from continually adding conditions (“ah, but in these circumstances it should behave slightly differently”).
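
A minimal sketch of this check, assuming a hypothetical change log in which each change records the file touched and whether it was driven by a requirement change (how commits get linked to requirements is left out): files that keep changing while their requirements stay the same are flagged for a closer look. The threshold is arbitrary.

```python
from collections import Counter
from dataclasses import dataclass
from typing import Dict, List, Tuple


@dataclass
class Change:
    path: str
    requirement_changed: bool  # hypothetical: was this change driven by a requirement change?


def code_volatility(changes: List[Change]) -> Dict[str, Tuple[int, int]]:
    """Map each file to (total changes, changes with no requirement change behind them)."""
    total = Counter(c.path for c in changes)
    unexplained = Counter(c.path for c in changes if not c.requirement_changed)
    return {path: (total[path], unexplained[path]) for path in total}


def files_to_review(changes: List[Change], max_unexplained: int = 2) -> List[str]:
    """Files whose code keeps changing while the requirements stay the same."""
    return sorted(path for path, (_, unexplained) in code_volatility(changes).items()
                  if unexplained > max_unexplained)


history = [
    Change("billing.py", True),
    Change("billing.py", False),
    Change("billing.py", False),
    Change("billing.py", False),
    Change("auth.py", True),
    Change("auth.py", False),
]
print(code_volatility(history))  # {'billing.py': (4, 3), 'auth.py': (2, 1)}
print(files_to_review(history))  # ['billing.py']
```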

As for your statements about complexity, I see complexity as related to requirements and how a system is modeled. If the requirements are reasonably stable, then you will not have these additional conditions to cater for. In that case, high complexity is an indicator that there is something wrong with how the system is modeled or how classes have been defined.

When it comes to problems with modeling or architecture, it is a real problem that metrics are lagging indicators. This is why I think there is some value in defining expectations for code metrics in the design, so that if the expectations are violated a red flag is raised immediately and the problem does not just surface at a much later point.
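
One way to state such expectations up front, sketched here under assumed names: the design records an upper bound per module for complexity and efferent coupling, and each new measurement is compared against those bounds so that a violation raises a red flag as soon as it appears. The module names, metric keys and limits are hypothetical.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Expectation:
    """Upper bounds agreed in the design for one module (hypothetical metrics)."""
    max_complexity: int
    max_efferent_coupling: int


def red_flags(expected: Dict[str, Expectation],
              measured: Dict[str, Dict[str, int]]) -> List[str]:
    """Compare measured metrics against design-time expectations."""
    flags = []
    for module, exp in sorted(expected.items()):
        actual = measured.get(module)
        if actual is None:
            flags.append(f"{module}: not measured")
            continue
        if actual["complexity"] > exp.max_complexity:
            flags.append(f"{module}: complexity {actual['complexity']} > {exp.max_complexity}")
        if actual["efferent_coupling"] > exp.max_efferent_coupling:
            flags.append(f"{module}: efferent coupling "
                         f"{actual['efferent_coupling']} > {exp.max_efferent_coupling}")
    return flags


design = {"orders": Expectation(10, 3), "users": Expectation(8, 2)}
scan = {
    "orders": {"complexity": 12, "efferent_coupling": 3},
    "users": {"complexity": 5, "efferent_coupling": 4},
}
for flag in red_flags(design, scan):
    print(flag)
```

Because the bounds are written down with the design, a violation points back to a specific design assumption rather than only to a number that drifted over time.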

One thing that I have not yet seen mentioned in any articles on Agile is the effect of the quality of the codebase being used. By this I mean its design/engineering and implementation. It is rare now to start a project from scratch, so most projects consist of modifications and/or enhancements to existing suites of software.

If the codebase is good (don’t bother asking me to define good; just talk to the developers), then story estimates will on average be accurate and everything should fall into place.

However, if this is not the case, then stories/sprints/deliveries will overrun, the product backlog will not come down at the desired rate, the problem list will grow and generally be ignored, developer morale will plummet and product quality will degrade even further. Engineering is then no longer important: after all, the product will be delivered anyway, because managers are under pressure to get it out the door.

So I would suggest that if you are thinking of adopting Agile as a solution to your problems, you first find out the true state of the codebase that will be used. If it is ‘good’, then Agile may help (but then why do you need it?), though it could also result in the codebase degrading as functionality is flung in at an increased rate.

If, however, it is ‘bad’, then adopting Agile will not help; you could still implement it (including adding a few people who are ‘passionate’ about it) and adopt the delusion that it is working.

Hi

I know that project management is not software engineering. You explain many points well. This is the moment for us to grow up as engineers and develop proper requirements, design, coding and testing practices, and maybe we will see actual improvement in our profession.